Here’s my first attempt to explain how RedditRecs works.

Core to RedditRecs is its data pipeline that analyzes Reddit data for reviews on products.

This is a gist of what the pipeline does:

- Given a set of products types (e.g. Air purifier, Portable monitor etc)

- Collect a list of reviews from reddit

- That can be aggregated by product models

- Such that the product models can be ranked by sentiment

- And have shop links for each product model

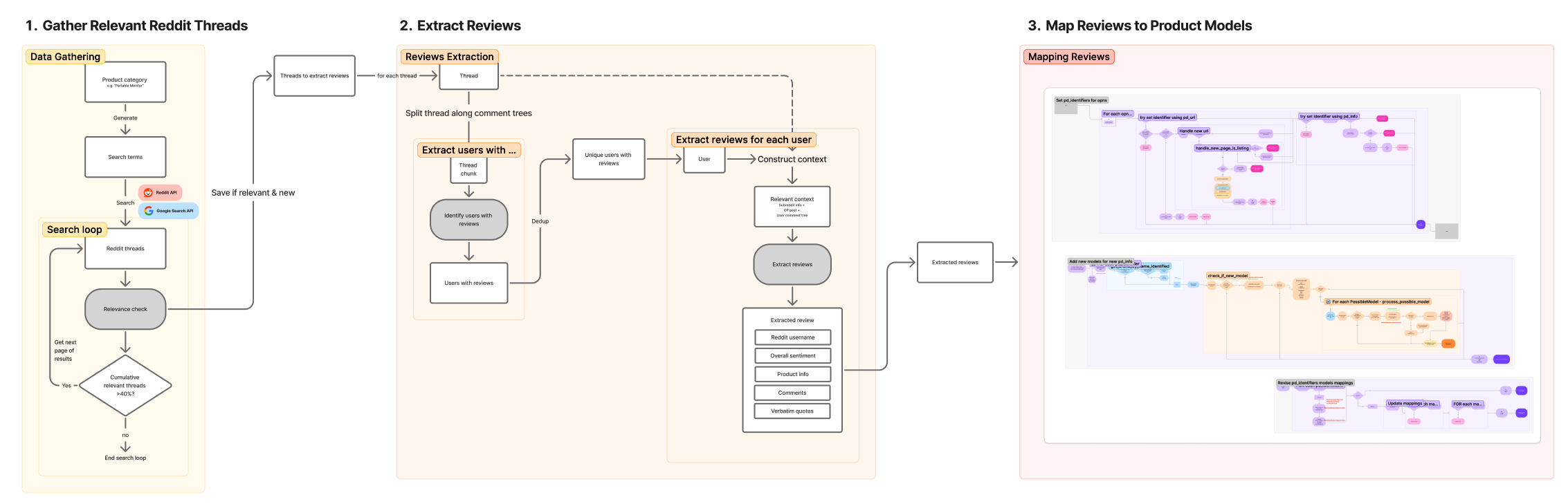

The pipeline can be broken down into 5 main steps:

- Gather Relevant Reddit Threads

- Extract Reviews

- Map Reviews to Product Models

- Ranking

- Manual Reconcillation

Step 1: Gather Relevant Reddit Threads

Gather as many relevant Reddit threads in the past year as (reasonably) possible to extract reviews for.

- Define a list of products types

- Generate search queries for each pre-defined product (e.g. Best air fryer, Air fryer recommendations)

- For each search query:

- Search Reddit up to past 1 year

- For each page of search results

- Evaluate relevance for each thread (if new) using LLM

- Save thread data and relevance evaluation

- Calculate cumulative relevance for all threads (new and old)

- If >= 40% relevant, get next page of search results

- If < 40% relevant, move on to next search query

Step 2: Extract Reviews

For each new thread:

- Split thread if its too large (without splitting comment trees) 1

- Identify users with reviews using LLM 2

- For each unique user identified:

- Construct relevant context (subreddit info + OP post + comment trees the user is part of)

- Extract reviews from constructed context using LLM

- Reddit username

- Overall sentiment

- Product info (brand, name, key details)

- Product url (if present)

- Verbatim quotes

Step 3: Map Reviews to Product Models

Now that we have extracted the reviews, we need to figure out which product model(s) each review is referring to.

This step turned out to be non-trivial. It’s too complex to lay out the steps.

But what will help give you an idea is to lay out the challenges I faced and the approach I took.

Handling informal name references

The first challenge is that there are many ways to reference one product model:

- A redditor may use abbreviations (e.g. “GPX 2” gaming mouse refers to the Logitech G Pro X Superlight 2)

- A redditor may simply refer to a model by its features (e.g. “Ninja 6 in 1 dual basket”)

- Sometimes adding a “s” behind a model’s name makes it a different model (e.g. the DJI Air 3 is distinct from the DJI Air 3s), but sometimes it doesn’t (e.g. “I love my Smigot SM4s”)

Related to this, a redditor’s reference could refer to multiple models:

- A redditor may use a name that could refer to multiple models (e.g. “Roborock Qrevo” could refer to Qrevo S, Qrevo Curv etc")

- When a redditor refers to a model by it features (e.g. “Ninja 6 in 1 dual basket”), there could be multiple models with those features

So it is all very context dependent. But this is actually a pretty good use case for an LLM web research agent.

So what I did was to have a web research agent research the extracted product info using Google [^3] and infer from the results all the possible product model(s) it could be.

Each extracted product info is saved to prevent duplicate work when another review has the exact same extracted product info.

Distinguishing unique models

But theres another problem.

After researching the extracted product info, let’s say the agent found that most likely the redditor was referring to “model A”. How do we know if “model A” corresponds to an existing model in the database?

What is the unique identifier to distinguish one model from another?

Some approached I considered:

- Model codes as unique identifier. The problem is that not all products have model codes and sometimes the same product can have multiple model codes due to region or minor differences like color.

- Brand’s official product url as unique identifier. The assumption is that the official website would have the definitive list of distinct models, and the most comprehensive and accurate information that could be used to match with the extracted product to determine the possible models. However, many brand websites are difficult to scrape. I did not want to depend on an approach which meant building and maintaining a scraper that could scrape all brand sites.

The approach I ended up with is to use the model name and description (specs & features) as the unique identifier:

- To determine if an identified model exists in my database, I would take the model name and description found from the research and compare them with the names and descriptions of the existing models in my database.

- This also enabled me to accurately map shop links from any platforms (amazon, walmart, official site etc) to the models, as long as the shop link had sufficient info about the product model

- For efficiency:

- I use string matching on the name first

- If the name doesn’t match, then I use LLMs to compare name and description

- I determine the brand first (easier) so that I only compare the models within the brand instead of all models

Step 4: Ranking

The ranking is based on aggregated user sentiment from Reddit. It aims to show which Air Purifiers are the most well reviewed.

Key ranking factors:

- The number of positive user sentiments

- The ratio of positive to negative user sentiment

- How specific the user was in their reference to the model

Scoring mechanism:

- Each user contributes up to 1 “vote” per model, regardless of no. of comments on it.

- A user’s vote is less than 1 if the user does not specify the exact model - their 1 vote is “spread out” among the possible models.

- More popular models are given more weight (to account for the higher likelihood that they are the model being referred to).

Score calculation for ranking:

- I combined the normalized positive sentiment score and the normalized positive:negative ratio (weighted 75%-25%)

- This score is used to rank the models in descending order

Step 5: Manual Reconciliation

I have an internal dashboard to help me catch and fix errors more easily than trying to edit the database via the native database viewer (highly vibe coded)

This includes a tool to group models as series.

The reason why series exists is because in some cases, depending on the product, you could have most redditors not specifying the exact model. Instead, they just refer to their product as “Ninja grill” for example.

If I do not group them as series, the rankings could end up being clogged up with various Ninja grill models, which is not meaningful to users (considering that most people don’t bother to specify the exact models when reviewing them).

Tech Stack & Tools

Code

- Python (for script)

- HTML, Javascript, Typescript, Nuxt (for frontend)

Database

- Supabase

LLM APIs

- OpenAI (mainly 4o and o3-mini)

- Gemini (mainly 2.5 flash)

Data APIs

- Reddit PRAW

- Google Search API

- Amazon PAAPI (for amazon data & generating affilaite links)

- BrightData (for scraping common ecommerce sites like Walmart, BestBuy etc)

- FireCrawl (for scraping other web pages)

- Jina.ai (backup scraper if FireCrawl fails)

- Perplexity (for very simple web research only)

-

The purpose is to reduce the context the LLM needs to reason over because generally performance decrease with context size (common pattern you’ll see throughout). Comment trees are not split to preserve context in reply chains. ↩︎

-

Notice we’re not going straight into review extraction. This is mainly to keep the instructions for each LLM call straightforward to avoid context stuffing. Besides, the user may have other comments stranded in other chunks, and we want to include that when evaluating the user’s overall sentiment. ↩︎